I was asked to set up some home LAN Minecraft and PocketMine servers for my children, so they can play at home and online with their friends in a safe, private environment. I was also interested in the geekier side of setting these servers up, and in seeing what all the Minecraft fuss is about 🙂

This post covers:

- setting up a “normal” Minecraft Server on Linux

- setting up a Minecraft Pocket Edition Server on Linux

- getting started with the Linux “screen” command to manage multiple Minecraft Server processes

Setting up a normal Minecraft server on Linux (and, I’d imagine, any other OS) is very easy. You just need Java and the Minecraft server jar file, which you can get here:

https://minecraft.net/download

And as that page says, just sort out Java then kick off the process like this:

java -Xmx1024M -Xms1024M -jar minecraft_server.jar nogui

Make sure you change minecraft_server.jar to match the name of the file you downloaded (e.g. minecraft_server.1.7.4.jar).
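If you don't want to edit the command every time you download a new version, a small helper can pick the jar for you. This is only a sketch: the function name `find_server_jar` is made up for illustration, and it assumes the downloaded files all match `minecraft_server*.jar` and sit in one directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of Minecraft: print the most recently
# modified file matching minecraft_server*.jar in the given directory
# (default: current directory). Returns non-zero if there is none.
find_server_jar() {
    local dir="${1:-.}"
    local newest="" f
    for f in "$dir"/minecraft_server*.jar; do
        [ -e "$f" ] || continue              # the glob matched nothing
        if [ -z "$newest" ] || [ "$f" -nt "$newest" ]; then
            newest="$f"                      # keep the newer file
        fi
    done
    [ -n "$newest" ] && printf '%s\n' "$newest"
}

# Typical use, with the same memory settings as above:
#   jar=$(find_server_jar .) && java -Xmx1024M -Xms1024M -jar "$jar" nogui
```

Picking by modification time rather than by version number keeps the sketch short, but it does mean a re-downloaded older jar would win.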

I originally put that command in a script and started it with nohup, but realised that you then lose the ability to interact with the process. I have since changed my approach and now use the screen command, which is much better. See the notes below on how that can be done; it’s easy and very useful.

Also, I think it’s safer to stop your Minecraft server/world cleanly by typing “stop” in the console, rather than pressing Ctrl-C or killing the PID. After I started doing this, I stopped getting error messages like this one in the console output:

[ERROR] A level 8 error happened: "Uninitialized string offset: 8192" in...
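If the server is running inside a screen session, the same “stop” can be sent from a script without attaching to the console. The sketch below assumes a session called `minecraft` (a name I've picked for illustration) and uses screen's `stuff` command to type into it. `SCREEN_BIN` and `stop_server` are likewise just names for this example.

```shell
#!/usr/bin/env bash
# Assumption: the server was started in a screen session (default name
# "minecraft"). SCREEN_BIN can be overridden, e.g. for a dry run.
SCREEN_BIN="${SCREEN_BIN:-screen}"

# Type "stop" followed by Enter (a carriage return) into window 0 of
# the given session, as if it had been typed at the server console.
stop_server() {
    local session="${1:-minecraft}"
    "$SCREEN_BIN" -S "$session" -p 0 -X stuff "stop$(printf '\r')"
}

# Typical use:
#   stop_server              # stops the session named "minecraft"
#   stop_server pocketmine   # stops a session named "pocketmine"
```

The function only sends the keystrokes; it doesn't wait for the world to finish saving, so give the server a few seconds before shutting the machine down.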

Setting up a Minecraft Pocket Edition server… this was initially quite a contrast to the above, but it got better…

I started off by following the Pocketmine Server setup and installation instructions I found which said to simply download the install script (PocketMine-MP_Installer_XXXXX.sh) and run it:

https://github.com/PocketMine/PocketMine-MP/wiki/Setting-up-a-Server#wiki-linux

but this really didn’t work for me and led to a catalogue of vague errors and a whole load of googling which took me back to the olden times of fighting with make, configure, libraries, conflicts, missing tools and config files and installing all sorts of things based on vague hints from obscure error messages found in cryptic log files… you get the general idea I guess.

It has a simple and happy ending (below), but my experience started off something like this…

don@ubuntuserver:~/pe_minecraft$ ./start.sh

09:43:39 [ERROR] Unable to find the pthreads extension.

09:43:39 [ERROR] Unable to find the cURL extension.

09:43:39 [ERROR] Unable to find the SQLite3 extension.

09:43:39 [ERROR] Please use the installer provided on the homepage.

And that was after running the installer provided on the homepage and checking the dependencies were all there… so I debugged the steps in that script and was then on to the log files… where one thing led to another for quite a while… some of the more memorable ones are…

“Compiler error reporting is too harsh for ./configure (perhaps remove -Werror).”

“configure: error: C compiler cannot create executables”

I tried “apt-get install build-essential” to fix that, and got a bit further:

/usr/include/stdc-predef.h:30:26: fatal error: bits/predefs.h: No such file or directory

I tried “apt-get install libc6-dev-i386” to fix that, and got a bit further again.

There were several other issues and no end in sight, and I realised it really shouldn’t be this frustrating, so I looked for another approach.

The PocketMine-MP server code is all hosted on GitHub here:

https://github.com/shoghicp/PocketMine-MP?1358555786480

So I simply downloaded everything from there (you could do “git clone” or “svn export”, but I went for wget’ing the latest zip file):

wget https://github.com/PocketMine/PocketMine-MP/archive/master.zip

I extracted that, then ran the compile script:

./src/build/compile.sh

waited a while, then ran the start script:

./start.sh

This time there were no problems: my Minecraft Pocket Edition server was up and running on the home LAN, and local devices were able to connect without any trouble.

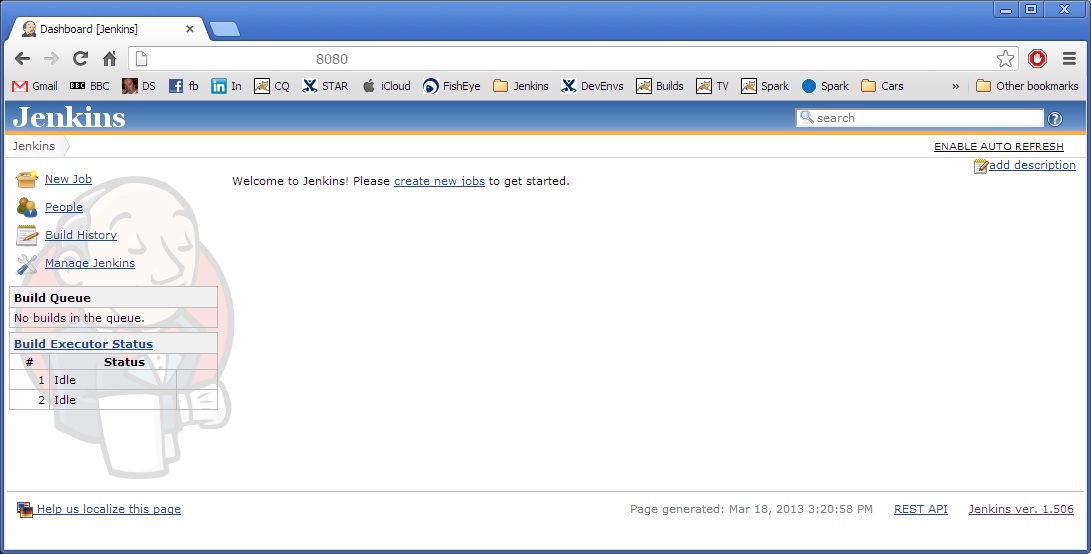
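For reference, the download and build steps above can be strung together roughly like this. It's a sketch rather than a tested installer: `unzip` is my assumption for the extract step, `PocketMine-MP-master` is the directory name GitHub normally gives a master.zip archive, and `run` and `build_pocketmine` are illustrative names. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Run a command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Download, extract and compile PocketMine-MP, stopping at the first
# step that fails. Assumes wget and unzip are installed.
build_pocketmine() {
    run wget https://github.com/PocketMine/PocketMine-MP/archive/master.zip &&
    run unzip master.zip &&
    run cd PocketMine-MP-master &&
    run ./src/build/compile.sh
}

# Typical use:
#   DRY_RUN=1 build_pocketmine   # show what would be run
#   build_pocketmine && ./start.sh
```

The repository layout may well have changed since I did this, so treat the paths as a starting point.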

The Linux “screen” command is a very useful tool for Minecraft server admins. Please see this post for details on getting started with it:

Minecraft Server Admin tips – using the Linux screen command

It allows you to manage multiple Minecraft server processes and consoles easily, without having to keep multiple sessions open.
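As a quick taste, here is a minimal sketch of running both servers in their own named screen sessions. The session names and the `~/minecraft` directory are assumptions for illustration (`~/pe_minecraft` is where my PocketMine server lives), and the function names are made up. The screen options used are `-dmS` (start detached with a name), `-r` (reattach) and `-ls` (list sessions).

```shell
#!/usr/bin/env bash
# SCREEN_BIN can be overridden, e.g. for a dry run.
SCREEN_BIN="${SCREEN_BIN:-screen}"

# Start a command in a new detached screen session with the given name.
start_in_screen() {
    local session="$1"; shift
    "$SCREEN_BIN" -dmS "$session" "$@"
}

# Reattach to a session's console; detach again with Ctrl-a d.
attach_console() {
    "$SCREEN_BIN" -r "$1"
}

# List the screen sessions that are currently running.
list_sessions() {
    "$SCREEN_BIN" -ls
}

# Typical use (directories are placeholders):
#   (cd ~/minecraft && start_in_screen minecraft java -Xmx1024M -Xms1024M -jar minecraft_server.jar nogui)
#   (cd ~/pe_minecraft && start_in_screen pocketmine ./start.sh)
#   list_sessions
#   attach_console pocketmine
```

Each server keeps its own console, so you can attach to one, type a command, detach, and leave both running after you log out.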

Cheers,

Don