This is the second post on Jenkins Pipelines on Kubernetes with Minikube, following on from the initial setup steps here:

That post went as far as having a Kubernetes cluster up and running for local development. That was primarily focused on Mac, but once you reach the point of having a running Kubernetes Cluster with kubectl configured to talk to it, the hosting platform/OS makes little difference.

This second section takes a more detailed look at running Jenkins Pipelines inside the Kubernetes Cluster, and automatically provisioning Jenkins JNLP Agents via Kubernetes, then takes an in-depth look at what we can do with all of that, with a complete working example.

This post covers quite a lot:

- Adding Helm to the Kubernetes cluster for package management

- Deploying Jenkins on Kubernetes with Helm

- Connecting to the Jenkins UI

- Setting up a first Jenkins Pipeline job

- Running our pipeline and taking a look at the results

- What Next

Adding Helm to the Kubernetes cluster for package management

Helm is a package manager for Kubernetes, and like Minikube it is ideal for quickly setting up development environments, plus much more if you want to. Take a look through the Helm hub to see just some of the other things it can do.

On Mac you can use brew to install the local helm component:

brew install helm

Again, you can use a minikube addon for the k8s cluster side. Note that Helm v3 removes the requirement for Tiller, so this step and the helm init step below only apply to Helm v2.

minikube addons enable helm-tiller

You should then see a Tiller pod start up in your Kubernetes kube-system namespace:
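If you prefer to check from the terminal rather than the dashboard, a small helper along these lines lists just the Tiller pod. This is a minimal sketch: the function name tiller_pods is made up for illustration, and it assumes the pod name contains "tiller".

```shell
# List the pods in kube-system and keep only the Tiller ones.
# Assumption: the Tiller pod's name contains the string "tiller".
tiller_pods() {
  kubectl get pods --namespace kube-system --no-headers | grep tiller
}
```

Run tiller_pods after enabling the addon; an empty result (and a non-zero exit code from grep) means the pod has not been created yet.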

Before you can use Helm, you first need to initialise the local Helm client, so simply run:

helm init --client-only

as our earlier minikube addons command has configured the connectivity and cluster already. Before we can use Helm to install Jenkins (or any of the many other things it can do), we need to update the local repo that contains the Helm Charts:

helm repo update

Hang tight while we grab the latest from your chart repositories...

...Skip local chart repository

...Successfully got an update from the "stable" chart repository

Update Complete.

Helm should now be set up and ready to use.

Deploying Jenkins on Kubernetes with Helm

Now that Helm is set up and can speak to our k8s instance, installing hundreds of software packages suddenly becomes very simple, including Jenkins. We’ll just give the install a friendly name “jenki” and use NodePort to simplify the networking; nothing more is required for this dev setup:

helm install --set serviceType=NodePort --name jenki stable/jenkins

Obviously we’re skipping over all the for-real things you may want for a longer-lived Jenkins instance, like backups, persistence, resilience, authentication and authorisation, but this bare-bones setup is sufficient for now.
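The commands above use Helm v2 syntax. If brew gave you Helm v3, there is no helm init or Tiller, the release name becomes a positional argument, and the stable repository has to be added by hand. A rough equivalent is sketched below; the function name is hypothetical, it uses the archived stable repository at https://charts.helm.sh/stable, and it keeps the same --set flag as the command above.

```shell
# Helm v3 sketch of the install above: add the archived "stable" repo,
# refresh the local chart index, then install Jenkins under the given release name.
install_jenkins_helm3() {
  release="${1:-jenki}"
  helm repo add stable https://charts.helm.sh/stable &&
    helm repo update &&
    helm install "$release" stable/jenkins --set serviceType=NodePort
}
```

Calling install_jenkins_helm3 with no argument uses the same “jenki” release name as the rest of this post.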

Connect to the Jenkins UI

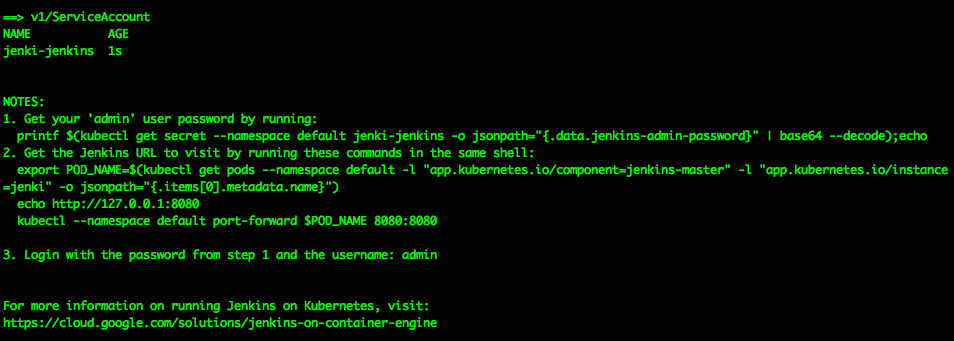

The Helm install should spit out some helpful info like this, explaining how to get the Jenkins Admin password and how to connect to the UI:

1. Get your 'admin' user password by running:

printf $(kubectl get secret --namespace default jenki-jenkins -o jsonpath="{.data.jenkins-admin-password}" | base64 --decode);echo

2. Get the Jenkins URL to visit by running these commands in the same shell:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/component=jenkins-master" -l "app.kubernetes.io/instance=jenki" -o jsonpath="{.items[0].metadata.name}")

echo http://127.0.0.1:8080

kubectl --namespace default port-forward $POD_NAME 8080:8080

3. Login with the password from step 1 and the username: admin

For more information on running Jenkins on Kubernetes, visit:

https://cloud.google.com/solutions/jenkins-on-container-engine

looking something like this in the console:

Going back to the Kubernetes Dashboard we can now see the “jenki” Jenkins deployment in the default namespace:

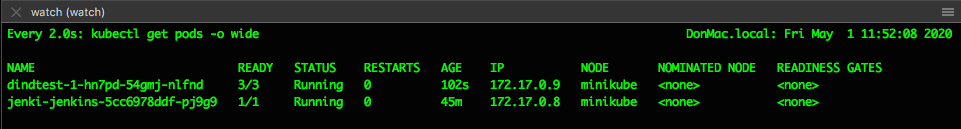

and you can monitor the pods via the console with:

watch kubectl get pods -o wide

Note: I install the useful ‘watch’ command via brew too, along with the zsh plugin for minikube.
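If you don't have watch installed, or want something you can drop into a script, kubectl wait can block until the Jenkins pod reports Ready. The sketch below reuses the app.kubernetes.io/instance label from Helm's output; the function name and the 300 second timeout are arbitrary choices for illustration.

```shell
# Block until every pod labelled with the given Helm release is Ready,
# or give up after five minutes.
wait_for_jenkins() {
  release="${1:-jenki}"
  kubectl wait pod --namespace default \
    --selector "app.kubernetes.io/instance=${release}" \
    --for=condition=Ready --timeout=300s
}
```

kubectl wait exits non-zero on timeout, so wait_for_jenkins && echo ready is a simple way to gate the next step.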

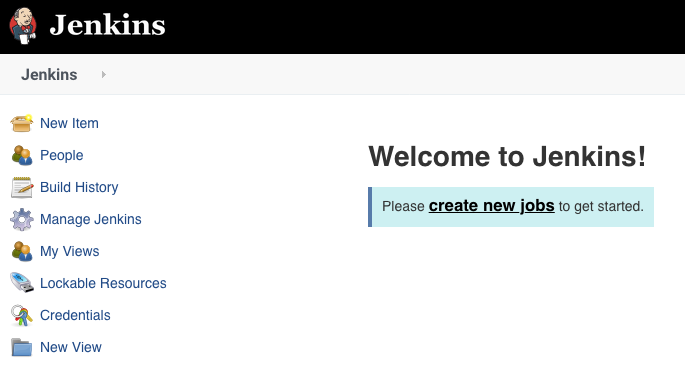

After following the steps to get the admin password and opening the Jenkins URL http://127.0.0.1:8080 in your desktop browser, you should see the familiar “Welcome to Jenkins!” page…

Pause a moment to appreciate that this Jenkins is running in a JVM inside a Docker container on a Kubernetes Pod as a Service in a Namespace in a Kubernetes Instance that’s running inside a Virtual Machine running under a Hypervisor on a host device….

turtles all the way down

There are many things I’ve skipped over here, including storage, auth, security and all the usual considerations, but the aim has been to get to this point quickly and easily so we can start developing the pipelines and processes we really want to focus on.

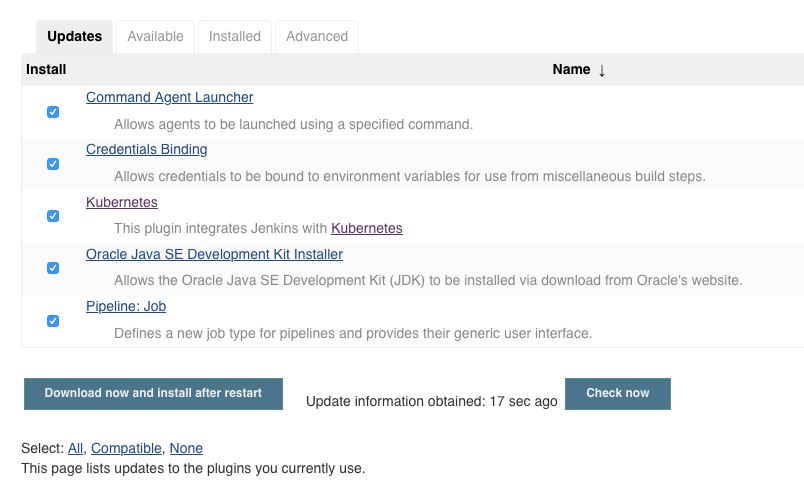

Navigating to Manage Jenkins then Plugin Manager should show some updates already available, which proves we have connectivity to the public Jenkins Update Centre out of the box. The Kubernetes Jenkins plugin is the key thing I’m looking for; select and update it if required:

If you go to http://127.0.0.1:8080/configure you should see a link at the foot of the page to the new location for “Clouds”: http://127.0.0.1:8080/configureClouds/ – that should already be configured with sufficient settings for Jenkins to use your Kubernetes cluster, but it’s worthwhile taking a look through the settings and options there. No changes should be required here now though.

Setting up a first Jenkins Pipeline job

Create a new Jenkins Pipeline job and add the following settings as shown in the picture below…

In the job config page under “Pipeline”, for “Definition” select “Pipeline script from SCM” and enter the URL of this GitHub project, which contains my example pipeline code:

https://github.com/DonaldSimpson/minikube-pipelines.git

everything else can be left as the default, and should look something like this:

This means that your Job will checkout my example repo and run the pipeline Groovy code in the Jenkinsfile, which you can see here:

https://github.com/DonaldSimpson/minikube-pipelines/blob/master/Jenkinsfile

This file has been heavily commented to explain every part of the pipeline and shows what each step is doing. Taking a read through it should show you how pipelines work, how Jenkins is creating Docker Containers for the different Stages, and give you some ideas on how you could develop this simple example further.

Run it and take a look at the results

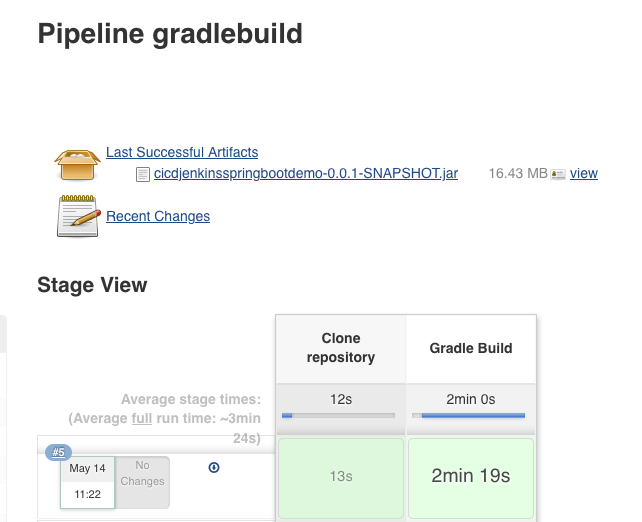

Save and run the job, and you should (eventually) see something like this:

The job’s Console Output will have a ton of info, showing everything from the container images being pulled and the git repo being cloned to the very verbose gradle build output and all the local files.

So in summary, what just happened?

- Jenkins connected to Kubernetes via the Kubernetes plugin and its settings

- The required Docker images (git and gradle, as specified at the top of the Jenkinsfile pipeline) were pulled from Docker Hub

- A git Docker container was started up (as a new pod in k8s) and connected to Jenkins as an Agent using JNLP

- A ‘git clone’ was run inside that container to check out the source code from an example repo

- A gradle Docker container was started and connected as a Jenkins JNLP Agent, running as another k8s pod

- The gradle build stage was run inside that gradle container, using the source files checked out from git in the previous Stage

- The newly built JAR file was archived so we could use it later if wanted

- The pipeline ends, and k8s will clean up the containers

This pipeline could easily be expanded to run that new JAR file as an application, as demonstrated here: https://github.com/AutomatedIT/springbootjenkinspipelinedemo/blob/master/Jenkinsfile#L5. Alternatively, you could build a new Docker image containing this version of the JAR file, start that up, test it and so on. You could also automate this so that whenever the source code is changed a build is triggered that does all of this automatically and records the result… hello CI/CD!
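As one possible starting point for that automation, Jenkins jobs can be kicked off remotely with a POST to the job's build URL, which is something a git hook or another script could call. The helper below is only a sketch: the function name is hypothetical, the job name, user and API token are placeholders you would supply, and JENKINS_URL falls back to the port-forwarded address used earlier. In practice a webhook or SCM polling configured in the job is the more usual route.

```shell
# Trigger a build of a Jenkins job via the remote access API.
# Arguments are placeholders: job name, Jenkins user, and that user's API token.
trigger_build() {
  job="$1"; user="$2"; token="$3"
  curl --silent --show-error --fail -X POST \
    --user "${user}:${token}" \
    "${JENKINS_URL:-http://127.0.0.1:8080}/job/${job}/build"
}
```

For example, trigger_build my-pipeline admin "$API_TOKEN" would queue a build of a job called my-pipeline, assuming such a job and token exist.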

What next?

From the above demo you can hopefully see how easy it is to create an end to end pipeline that will automatically provision Jenkins Agents running on Kubernetes for you.

You can use this functionality to quickly and safely develop pipeline processes like the one we have examined, that run across multiple Agents, using each for a particular function/step in your workflow, leaving the provisioning and housekeeping work to the underlying Kubernetes cluster. With this, you can build or pull docker images, run them, test them, start them up as other Jenkins JNLP Agents and so on, all “as code” and all fully automated.

And after all that… ?

Being able to fire up Docker containers and use them as Jenkins Agents running on a Kubernetes platform is extremely powerful in itself, but you can go a step further and start using this setup to build, deploy and manage Kubernetes resources directly, too – from Jenkins Pipelines running on the same Kubernetes Cluster – or even from one Kubernetes to another.

We’ve seen during setup that we can use kubectl to manage the k8s cluster and its components – we can also do that from within containers and stages in our pipelines, wherever they are.

This example project demonstrates just that:

https://github.com/DonaldSimpson/devdoncoin

and contains an example pipeline and supporting files to build, lint, security scan, push to registry, deploy to Kubernetes, run, test and clean up the example “doncoin” application via a Jenkins pipeline running on Kubernetes.

It also includes outlines and suggestions for expanding things even further into a more mature and production-ready setup, introducing things like Jenkins shared libraries, linting and testing, automating vulnerability scanning within the pipeline, and so on.

Note the docker containers used there, the kubernetes yaml file and shell script, and the simple container with kubectl inside it.
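To give a feel for what a pipeline stage running in a kubectl container might do, here is a minimal sketch of a deploy step. It is not taken from the devdoncoin project: the function name is made up, and the manifest path and Deployment name are placeholders passed in by the caller.

```shell
# Apply a Kubernetes manifest, then wait for the named Deployment to finish rolling out.
# Both arguments are placeholders: your own manifest path and Deployment name.
deploy_and_wait() {
  manifest="$1"; deployment="$2"
  kubectl apply -f "$manifest" &&
    kubectl rollout status "deployment/${deployment}" --timeout=120s
}
```

Because kubectl rollout status exits non-zero if the rollout does not complete within the timeout, a failed deploy would fail the pipeline stage that calls it.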

Cheers,

Don
