Introduction

This is Step 1 of my recent Kubernetes setup, in which I briefly describe the process I followed to build and configure the basic requirements for a simple Kubernetes cluster.

Step 2 is here: https://www.donaldsimpson.co.uk/2018/12/29/kubernetes-from-cluster-reset-to-up-and-running/

and Step 3, where I set up Helm and Tiller and deploy an initial chart to the cluster, is here: https://www.donaldsimpson.co.uk/2019/01/03/kubernetes-adding-helm-and-tiller-and-deploying-a-chart/

The TL;DR

A quick summary should cover 99% of this, but I wanted to make sure I'd recorded my process to get there. To cut a long story short, I ended up using this Ansible project:

https://github.com/DonaldSimpson/ansible-kubeadm

which I forked from the original here:

https://github.com/ben-st/ansible-kubeadm

on the 5 Ubuntu Linux hosts I created by hand (the horror) on my VMware ESX home lab server. I started off writing my own Ansible playbook, which did the job, then went looking for improvements and found that the above fitted my needs perfectly.

The inventory file at https://github.com/DonaldSimpson/ansible-kubeadm/blob/master/inventory details the addresses and roles of the 5 hosts: 4 workers and a single master, which I plan to keep solely for the master role.

My notes:

Host prerequisites are in my rough notes below: simple things like SSH keys, passwordless sudo for the ansible user, installing required tools like Python, setting suitable IP addresses and adding the users you want to use. You also need to allocate suitable amounts of memory, CPU and disk, all of which are down to your preference, availability and expectations.
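Before running any playbooks it can help to confirm that the basic tools are actually present on each host. This is a minimal sketch, assuming bash; `missing_tools` is a hypothetical helper name, and the tool names in the usage line are only examples.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical pre-flight helper: print each named command that is NOT on PATH.
# Returns 0 if everything was found, 1 if anything is missing.
missing_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      missing=1
    fi
  done
  return "$missing"
}
```

Call it in a conditional so a non-zero return does not trip `set -e`, e.g. `missing_tools python ssh sudo || echo "install the tools listed above"`.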

https://kubernetes.io/docs/setup/independent/create-cluster-kubeadm/

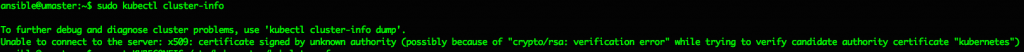

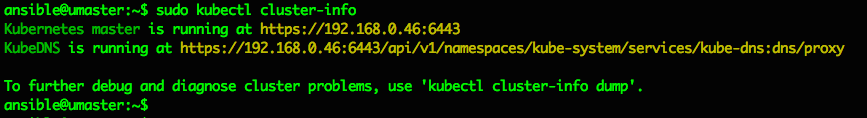

ubuntumaster is 192.168.0.46

su - ansible

check history

ansible setup

https://www.howtoforge.com/tutorial/setup-new-user-and-ssh-key-authentication-using-ansible/

1 x master:

- sudo apt-get install open-vm-tools-desktop
- sudo apt install openssh-server vim whois python ansible (the whois package provides mkpasswd, used below)
- export TERM=linux (re https://stackoverflow.com/questions/49643357/why-p-appears-at-the-first-line-of-vim-in-iterm)

- /etc/hosts:

127.0.1.1 umaster

192.168.0.43 ubuntu01

192.168.0.44 ubuntu02

192.168.0.45 ubuntu03
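Adding these /etc/hosts lines by hand is fine once, but if the hosts get rebuilt it is easy to end up with duplicates. This is a minimal idempotent sketch, assuming whitespace-separated hosts entries; `add_hosts_entry` is a hypothetical name, and the file path is a parameter so it can be tried on a scratch copy first.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: append "IP<TAB>hostname" to a hosts-format file unless
# some non-comment line already lists that hostname.
add_hosts_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # awk exits 0 if the hostname appears as an alias field (2nd field onwards)
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
    return 0   # already present, leave the file alone
  fi
  printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
}
```

As root, `add_hosts_entry /etc/hosts 192.168.0.43 ubuntu01` (and so on for each node) can be re-run safely.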

// slave nodes need: ssh-rsa AAAAB3NzaC1y<snip>fF2S6X/RehyyJ24VhDd2N+Dh0n892rsZmTTSYgGK8+pfwCH/Vv2m9OHESC1SoM+47A0iuXUlzdmD3LJOMSgBLoQt ansible@umaster

added to the root user's authorized_keys in .ssh, and apt install python ansible -y

//apt install python ansible -y

useradd -m -s /bin/bash ansible

passwd ansible <type the password you want>

echo -e 'ansible\tALL=(ALL)\tNOPASSWD:\tALL' > /etc/sudoers.d/ansible

echo -e 'don\tALL=(ALL)\tNOPASSWD:\tALL' > /etc/sudoers.d/don
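The echo commands above can be wrapped in a small function that also sets the file mode. This is a sketch, not the exact commands I ran: `write_sudoers_dropin` is a hypothetical name, and the `SUDOERS_DIR` override is an assumption added only so it can be tried against a scratch directory.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: create a passwordless-sudo drop-in for one user.
# SUDOERS_DIR defaults to /etc/sudoers.d; override it to test elsewhere.
write_sudoers_dropin() {
  local user="$1"
  local dir="${SUDOERS_DIR:-/etc/sudoers.d}"
  local file="${dir}/${user}"
  # printf expands \t and \n in its format string, giving tab-separated fields
  printf '%s\tALL=(ALL)\tNOPASSWD:\tALL\n' "$user" > "$file"
  # 0440 is the conventional mode for sudoers drop-in files
  chmod 0440 "$file"
}
```

Run as root, e.g. `write_sudoers_dropin ansible`, then check the syntax with `visudo -c -f /etc/sudoers.d/ansible`. Keep the file name free of dots and trailing `~`, because sudo skips such files in `/etc/sudoers.d`.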

mkpasswd --method=SHA-512 <type password "secret">

Password:

$6$dqxHiCXHN<snip>rGA2mvE.d9gEf2zrtGizJVxrr3UIIL9Qt6JJJt5IEkCBHCnU3nPYH/

su - ansible

ssh-keygen -t rsa

cd ansible01/

vim inventory.ini

ansible@umaster:~/ansible01$ cat inventory.ini

[webserver]

ubuntu01 ansible_host=192.168.0.43

ubuntu02 ansible_host=192.168.0.44

ubuntu03 ansible_host=192.168.0.45

ansible@umaster:~/ansible01$ cat ansible.cfg

[defaults]

inventory = /home/ansible/ansible01/inventory.ini

ansible@umaster:~/ansible01$ ssh-keyscan 192.168.0.43 >> ~/.ssh/known_hosts

# 192.168.0.43:22 SSH-2.0-OpenSSH_7.6p1 Ubuntu-4

# 192.168.0.43:22 SSH-2.0-OpenSSH_7.6p1 Ubuntu-4

# 192.168.0.43:22 SSH-2.0-OpenSSH_7.6p1 Ubuntu-4

ansible@umaster:~/ansible01$ ssh-keyscan 192.168.0.44 >> ~/.ssh/known_hosts

# 192.168.0.44:22 SSH-2.0-OpenSSH_7.6p1 Ubuntu-4

# 192.168.0.44:22 SSH-2.0-OpenSSH_7.6p1 Ubuntu-4

# 192.168.0.44:22 SSH-2.0-OpenSSH_7.6p1 Ubuntu-4

ansible@umaster:~/ansible01$ ssh-keyscan 192.168.0.45 >> ~/.ssh/known_hosts

# 192.168.0.45:22 SSH-2.0-OpenSSH_7.6p1 Ubuntu-4

# 192.168.0.45:22 SSH-2.0-OpenSSH_7.6p1 Ubuntu-4

# 192.168.0.45:22 SSH-2.0-OpenSSH_7.6p1 Ubuntu-4

ansible@umaster:~/ansible01$ cat ~/.ssh/known_hosts

or I could have done:

for i in $(cat list-hosts.txt)
do
  ssh-keyscan "$i" >> ~/.ssh/known_hosts
done

cat deploy-ssh.yml

---
- hosts: all
  vars:
    # Named provision_password rather than ansible_password: ansible_password is
    # Ansible's connection (SSH) password variable and would override --ask-pass
    provision_password: '$6$dqxHiCXH<kersnip>l.urCyfQPrGA2mvE.d9gEf2zrtGizJVxrr3UIIL9Qt6JJJt5IEkCBHCnU3nPYH/'
  gather_facts: no
  remote_user: root

  tasks:
    - name: Add a new user named provision
      user:
        name: ansible
        password: "{{ provision_password }}"

    - name: Add provision user to the sudoers
      copy:
        dest: "/etc/sudoers.d/ansible"
        content: "ansible ALL=(ALL) NOPASSWD: ALL"

    - name: Deploy SSH Key
      authorized_key:
        user: ansible
        key: "{{ lookup('file', '/home/ansible/.ssh/id_rsa.pub') }}"
        state: present

    - name: Disable Password Authentication
      lineinfile:
        dest: /etc/ssh/sshd_config
        regexp: '^PasswordAuthentication'
        line: "PasswordAuthentication no"
        state: present
        backup: yes
      notify:
        - restart ssh

    - name: Disable Root Login
      lineinfile:
        dest: /etc/ssh/sshd_config
        regexp: '^PermitRootLogin'
        line: "PermitRootLogin no"
        state: present
        backup: yes
      notify:
        - restart ssh

  handlers:
    - name: restart ssh
      service:
        name: sshd
        state: restarted

// end of the above file

ansible-playbook deploy-ssh.yml --ask-pass

results in:

PLAY [all] *********************************************************************

TASK [Add a new user named provision] ******************************************

fatal: [ubuntu02]: FAILED! => {"msg": "to use the 'ssh' connection type with passwords, you must install the sshpass program"}

for each node/slave/host: sudo apt-get install -y sshpass (strictly, sshpass is needed on the machine running ansible-playbook, here umaster)

ubuntu01 ansible_host=192.168.0.43

ubuntu02 ansible_host=192.168.0.44

ubuntu03 ansible_host=192.168.0.45

kubernetes setup

https://www.techrepublic.com/article/how-to-quickly-install-kubernetes-on-ubuntu/

Run install_apy.yml against all hosts, and against localhost too.

on master:

kubeadm init

results in:

root@umaster:~# kubeadm init

[init] using Kubernetes version: v1.11.1

[preflight] running pre-flight checks

I0730 15:17:50.330589 23504 kernel_validator.go:81] Validating kernel version

I0730 15:17:50.330701 23504 kernel_validator.go:96] Validating kernel config

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 17.12.1-ce. Max validated version: 17.03

[preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

root@umaster:~#

Run swapoff -a, then try again.
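`swapoff -a` only lasts until the next reboot; after that swap comes back from `/etc/fstab` and the kubelet, which by default refuses to run with swap enabled, will stop working again. This is a minimal sketch for making it stick, assuming a standard whitespace-separated fstab where field 3 is the filesystem type; `disable_swap_in_fstab` is a hypothetical name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: comment out active swap entries in an fstab-format file.
# Defaults to /etc/fstab (needs root); pass another path to test on a copy.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  local tmp
  tmp="$(mktemp)"
  # Leave existing comments alone; prefix "#" to lines whose type field is swap
  awk '$0 !~ /^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab" > "$tmp"
  # Write back with cat so the original file keeps its owner and permissions
  cat "$tmp" > "$fstab"
  rm -f "$tmp"
}
```

Take a copy first (`cp /etc/fstab /etc/fstab.bak`), then run `swapoff -a` followed by `disable_swap_in_fstab` as root.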

kubeadm init again, then wait for the images to be pulled etc.; this takes a while.

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node as root:

kubeadm join 192.168.0.46:6443 --token 9e85jo.77nzvq1eonfk0ar6 --discovery-token-ca-cert-hash sha256:61d4b5cd0d7c21efbdf2fd64c7bca8f7cb7066d113daff07a0ab6023236fa4bc

root@umaster:~#

Next up…

The next post in the series is here: https://www.donaldsimpson.co.uk/2018/12/29/kubernetes-from-cluster-reset-to-up-and-running/ and details an automated process to scrub my cluster and reprovision it (from a Kubernetes point of view; the hosts are left intact).