MLOps for DevOps Engineers – MiniLM & MLflow pipeline demo

As a DevOps and SRE engineer, I’ve spent a lot of time building automated, reliable pipelines and cloud platforms. Over the last couple of years, I’ve been applying the same principles to machine learning (ML) and AI projects.

One of those projects is CarHunch, a vehicle insights platform I developed. CarHunch ingests and analyses MOT data at scale, using both traditional pipelines and applied AI. Building it taught me first-hand how DevOps practices map directly onto MLOps: versioning datasets and models, tracking experiments, and automating deployment workflows. It’s a new and exciting area, but the core ideas are very much the same, with some interesting new tools and concepts added.

To make those ideas more approachable for other DevOps engineers, I have put together a minimal, reproducible demo using MiniLM and MLflow.

You can find the full source code here:

github.com/DonaldSimpson/mlops_minilm_demo

The quick way: make run

The simplest way to try this demo is with the included Makefile; that way, all you need is Docker (plus git and make).

# clone the repo
git clone https://github.com/DonaldSimpson/mlops_minilm_demo.git
cd mlops_minilm_demo

# build and run everything (training + MLflow UI)
make run

That one ‘make run’ command will:

- Spin up a containerised environment
- Run the demo training script (using MiniLM embeddings + Logistic Regression)
- Start the MLflow tracking server and UI

Here’s a quick screengrab of it running in the console:

Once it’s up and running, open http://localhost:5001 in your browser to explore the logged experiments.

What the demo shows

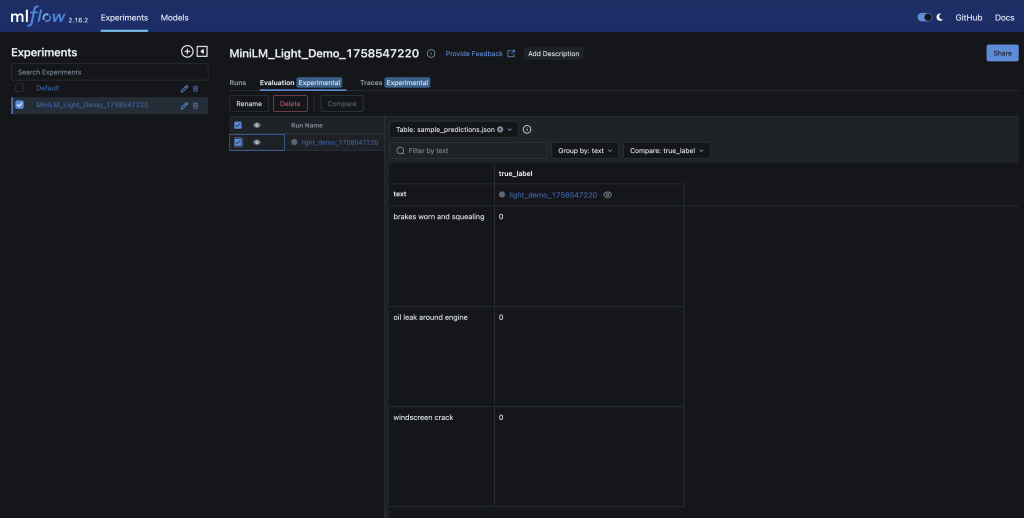

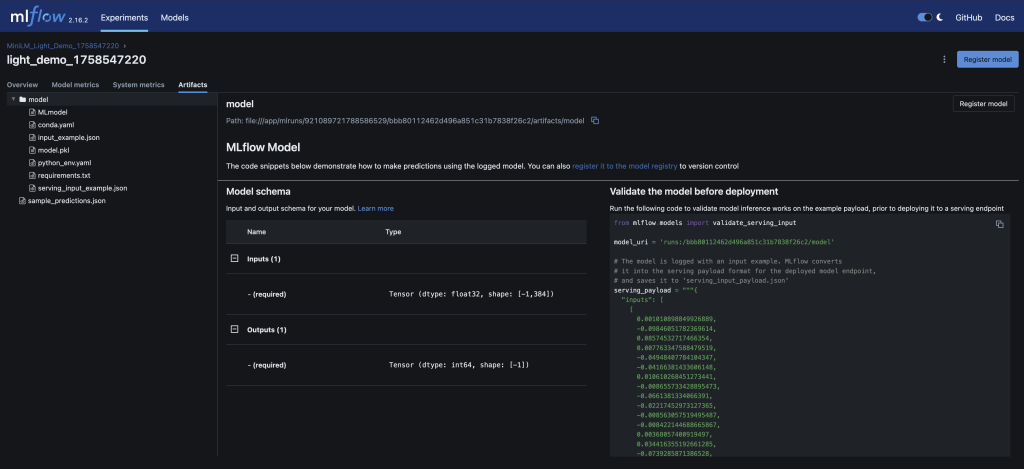

– MiniLM embeddings turn short MOT-style notes (e.g. “brakes worn”) into vectors

– A Logistic Regression classifier predicts pass/fail

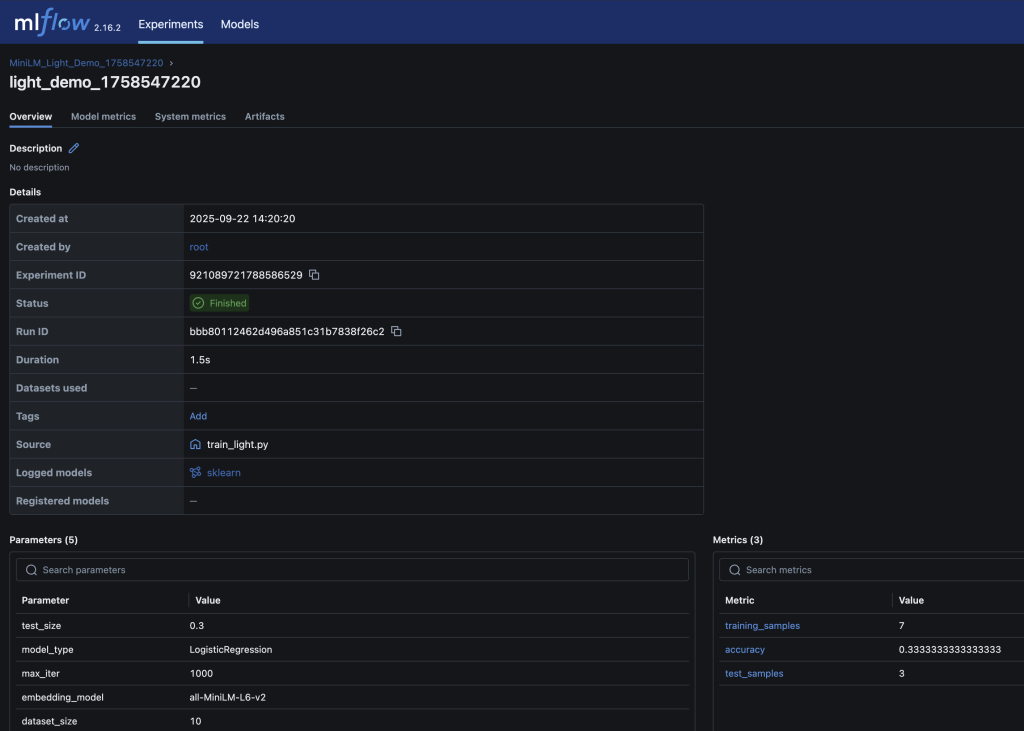

– Parameters, metrics (accuracy), and the trained model are logged in MLflow

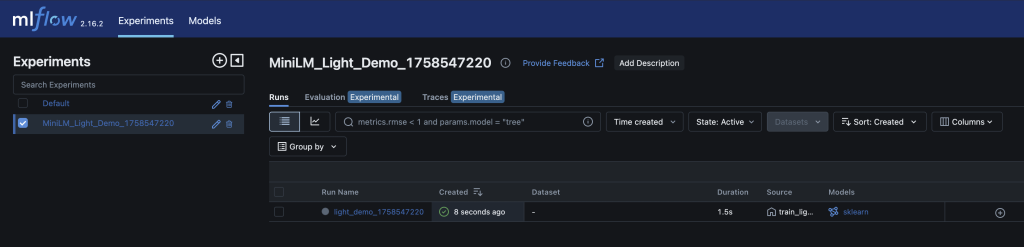

– You can inspect and compare runs in the MLflow UI – just like you’d review builds and artifacts in CI/CD

– Run detail; accuracy metrics and model artifact stored alongside parameters

The screenshots above show the relevant areas of the MLflow UI.
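The list above describes a two-stage pipeline: text goes in, a vector comes out, and a classifier turns that vector into pass/fail. As a rough, standard-library-only sketch of that shape (not the repo’s code), the example below swaps MiniLM for a simple hashed bag-of-words embedding so it runs without downloading a model, and fits a logistic regression with plain gradient descent. The notes, labels, `DIM` and the helpers `embed`, `train_logreg` and `predict` are all hypothetical.

```python
"""Toy stand-in for the demo pipeline: text -> vector -> pass/fail.

ASSUMPTION: a hashed bag-of-words embedding replaces MiniLM so this runs with
the standard library only. The notes and labels below are made up.
"""
import hashlib
import math

DIM = 64  # hypothetical embedding size (MiniLM's real vectors are larger)


def embed(text: str) -> list[float]:
    """Hash each lower-cased word into one of DIM buckets, then L2-normalise."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0  # avoid dividing by zero
    return [v / norm for v in vec]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logreg(xs, ys, lr=0.5, epochs=300):
    """Batch gradient descent on the logistic (cross-entropy) loss."""
    w, b, n = [0.0] * len(xs[0]), 0.0, len(xs)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x) -> int:
    """1 = fail, 0 = pass (threshold at probability 0.5)."""
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5)


# Made-up MOT-style notes; label 1 = fail, 0 = pass.
notes = ["brakes worn", "brake pads worn", "tyre tread below limit",
         "tyres worn", "all lights working", "no advisories",
         "emissions within limits", "lights and wipers ok"]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

X = [embed(n) for n in notes]
w, b = train_logreg(X, labels)
accuracy = sum(predict(w, b, x) == y for x, y in zip(X, labels)) / len(labels)
print(f"training accuracy: {accuracy:.2f}")
```

The hashing step is the weak link here: “brakes worn” and “brake pads worn” only overlap on “worn”, because “brakes” and “brake” hash to unrelated buckets, whereas sentence embeddings such as MiniLM’s place semantically similar notes close together. The `accuracy` value is the kind of metric the demo logs to MLflow for each run.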

Why this matters for DevOps engineers

- Familiar workflows: MLflow feels like Jenkins/GitHub Actions for models; every run is logged, reproducible and auditable
- Quality gates: just as builds pass or fail CI, models can be gated by accuracy thresholds before promotion
- Reproducibility: datasets, parameters and artifacts are versioned and tied to each run
- Scalability: the same pattern scales to real workloads; this demo is a scaled-down version of my own local process
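The quality-gate and reproducibility points can be made concrete with a small sketch. It assumes each run is recorded as a plain dict of params and metrics (a simplification of what MLflow stores), hashes the dataset and parameters so a run can be traced back to its exact inputs, picks the best run the way you’d pick the best build, and applies an accuracy threshold before promotion. The 0.8 threshold, the `C` values and the accuracy figures are made up; `fingerprint`, `quality_gate` and `pick_best` are hypothetical helpers. Against a real tracking server, `mlflow.search_runs(order_by=["metrics.accuracy DESC"])` is one way to get the same ranking.

```python
"""Sketch of a CI-style quality gate plus a reproducibility fingerprint.

ASSUMPTIONS: threshold, field names and helpers are hypothetical; in a real
setup the fingerprint and metrics would be logged with the MLflow run.
"""
import hashlib
import json


def _sha256(obj) -> str:
    # sort_keys makes the JSON (and so the hash) independent of key order
    blob = json.dumps(obj, sort_keys=True).encode()
    return hashlib.sha256(blob).hexdigest()


def fingerprint(dataset: list[dict], params: dict) -> dict:
    """Hash the exact data and parameters so a run can be tied back to them."""
    return {"data_sha256": _sha256(dataset), "params_sha256": _sha256(params)}


def quality_gate(accuracy: float, threshold: float = 0.8) -> bool:
    """Pass only if the candidate model meets the minimum accuracy."""
    return accuracy >= threshold


def pick_best(runs: list[dict]) -> dict:
    """Compare runs like builds: the highest accuracy wins."""
    return max(runs, key=lambda r: r["metrics"]["accuracy"])


# Made-up dataset and run records, purely for illustration.
data = [{"note": "brakes worn", "label": "fail"},
        {"note": "no advisories", "label": "pass"}]
runs = [
    {"params": {"C": 1.0}, "metrics": {"accuracy": 0.78}},
    {"params": {"C": 0.1}, "metrics": {"accuracy": 0.84}},
]

best = pick_best(runs)
best["fingerprint"] = fingerprint(data, best["params"])
passed = quality_gate(best["metrics"]["accuracy"])
print(json.dumps(best, indent=2))
print("gate:", "PASS" if passed else "FAIL")
# In CI you would finish with sys.exit(0 if passed else 1), so a failing
# gate fails the job just like a failing test stage.
```

Hashing with `sort_keys=True` means the same data and parameters always produce the same fingerprint, which is what lets you say two runs were trained on identical inputs.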

Other ways to run it

If you prefer, the repo includes alternatives:

- Python venv: create a virtualenv, install the dependencies from requirements.txt, then run train_light.py
- Docker Compose: build and run the services with docker-compose up --build
- Make targets: make train_light (quick run) or make train (full run)

These are useful if you want to dig a little deeper and see exactly what’s happening.

Next steps

Once you’re comfortable with this small demo, natural extensions are:

- Swap in a real dataset (e.g. DVLA MOT data)
- Add data validation gates (e.g. Great Expectations)
- Introduce bias/fairness checks with tools like Fairlearn
- Run the pipeline in Kubernetes (KinD/Argo) for reproducibility
- Hook it into GitHub Actions for end-to-end CI/CD
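Two of these next steps, data validation and bias/fairness checks, boil down to gates that run before and after training. The sketch below is a standard-library stand-in for what Great Expectations and Fairlearn provide: `validate` rejects records with an empty note or a label outside pass/fail, and `accuracy_by_group` breaks accuracy down per group so a large gap can block promotion. The field names, the use of vehicle make as the grouping column and the example predictions are all hypothetical. In Fairlearn, `MetricFrame` offers a similar per-group view through its `by_group` attribute and `difference()` method.

```python
"""Sketch of two pipeline gates: data validation and a per-group accuracy check.

ASSUMPTIONS: record fields, allowed labels and the 'make' grouping are
hypothetical; Great Expectations and Fairlearn offer richer versions of both.
"""
from collections import defaultdict

ALLOWED_LABELS = {"pass", "fail"}


def validate(records: list[dict]) -> list[str]:
    """Return a list of problems; an empty list means the data passes the gate."""
    problems = []
    for i, rec in enumerate(records):
        note = rec.get("note")
        if not isinstance(note, str) or not note.strip():
            problems.append(f"row {i}: empty or missing note")
        if rec.get("label") not in ALLOWED_LABELS:
            problems.append(f"row {i}: unexpected label {rec.get('label')!r}")
    return problems


def accuracy_by_group(y_true, y_pred, groups) -> dict:
    """Accuracy computed separately for each group value."""
    hits, totals = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        totals[g] += 1
        hits[g] += int(t == p)
    return {g: hits[g] / totals[g] for g in totals}


def max_gap(per_group: dict) -> float:
    """Largest accuracy difference between any two groups."""
    return max(per_group.values()) - min(per_group.values())


records = [
    {"note": "brakes worn", "label": "fail", "make": "A"},
    {"note": "", "label": "pass", "make": "B"},
    {"note": "no advisories", "label": "maybe", "make": "B"},
]
print(validate(records))

# Made-up predictions, purely to exercise the check.
y_true = ["fail", "pass", "pass", "fail"]
y_pred = ["fail", "pass", "fail", "fail"]
groups = ["A", "A", "B", "B"]
per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group, max_gap(per_group))
```

Here group A scores 1.0 and group B 0.5, a gap of 0.5; a pipeline could refuse to promote a model when that gap exceeds an agreed limit, in the same way the accuracy threshold gates a run.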

Closing thoughts

DevOps and MLOps share the same DNA: versioning, automation, observability and reproducibility. This demo repo is a small but practical bridge between the two.

Working on CarHunch gave me the chance to apply these ideas in a real platform. This demo distills those lessons into something any DevOps engineer can try locally.

Try it out at github.com/DonaldSimpson/mlops_minilm_demo and let me know how you get on.

Discover more from Don's Blog
