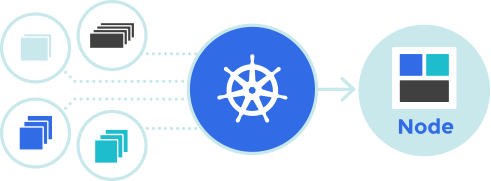

This is Step 2 in a series of Kubernetes blog posts

Step 1 covers the initial host creation and basic provisioning with Ansible: https://www.donaldsimpson.co.uk/2019/01/03/kubernetes-setting-up-the-hosts/

and Step 3 is where I set up Helm and Tiller and deploy an initial chart to the cluster: https://www.donaldsimpson.co.uk/2019/01/03/kubernetes-adding-helm-and-tiller-and-deploying-a-chart/

These are notes on going from a freshly reset kubernetes cluster to a running & healthy cluster with a pod network applied and worker nodes connected.

To get to this starting point I provisioned 4 Ubuntu hosts (1 master & 3 workers) on my VMWare server – a Dell Poweredge R710 with 128GB RAM.

I then used this Ansible project:

https://github.com/DonaldSimpson/ansible-kubeadm

to configure the hosts and prep for Kubernetes with kubeadm:

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

I’ll write about this in more detail in another post…

Please note that none of this is production grade or recommended, it’s simply what I have done to suit my needs in my home lab. My focus is on automating Kubernetes processes and deployments, not creating highly available bullet-proof production systems.

To reset and restore a ‘new’ cluster, first on the master instance – reboot and as a normal user (I’m using an “ansible” user with sudo throughout):

sudo kubeadm reset

(y)

sudo swapoff -a

sudo kubeadm init --pod-network-cidr=10.244.0.0/16

I’m passing that CIDR address as I’m using Flannel for pod networking (details follow) – if you use something else you may not need that, but may well need something else.

That should be the MASTER started, with a message to add nodes with:

kubeadm join 192.168.0.46:6443 --token 9w09pn.9i9uu1ht8gzv36od --discovery-token-ca-cert-hash sha256:4bb0bbb1033a96347c6dd888c769ec9c5f6caa1b699066a58720ffdb97a0f3d7

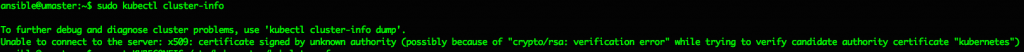

which all sounds good, but the first most basic check produces the following error:

ansible@umaster:~$ kubectl cluster-info

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

which I think is due to the kubeadm reset cleaning up the previous config, but can be easily fixed with this:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

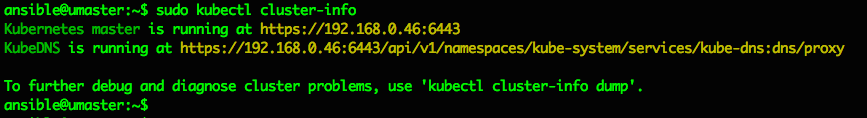

then it works and MASTER is up and running ok:

ansible@umaster:~$ sudo kubectl cluster-info

Kubernetes master is running at https://192.168.0.46:6443

KubeDNS is running at https://192.168.0.46:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

————- ADD NODES ——————

Use the command and token provided by the master on the worker node(s) (in my case that’s “ubuntu01” to “ubuntu04”). Again I’m running as the ansible user everywhere, and I’m disabling swap and doing a kubeadm reset first as I want this repeatable:

sudo swapoff -a

sudo kubeadm reset

sudo kubeadm join 192.168.0.46:6443 --token 9w09pn.9i9uu1ht8gzv36od --discovery-token-ca-cert-hash sha256:4bb0bbb1033a96347c6dd888c769ec9c5f6caa1b699066a58720ffdb97a0f3d7

I think the token expires after a few hours. If you want to get a new one you can query the Master using:

https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-token/

Or, as I’ve just found out, the more recent versions ok k8s provide “kubeadm token create –print-join-command”, which provide output like the following example that you can save to a file/variable/whatever:

kubeadm join 192.168.0.46:6443 --token 8z5obf.2pwftdav48rri16o --discovery-token-ca-cert-hash sha256:2fabde5ad31a6f911785500730084a0e08472bdcb8cf935727c409b1e94daf44

I believe options to specify json or alternative output formatting is in the works too.

That’s all that is needed, if you’ve not used this node already it may take a while to pull things in but if you have it should be pretty much instant.

When ready, running a quick check on the MASTER shows the connected node (ubuntu01) and the Master (umaster) and their status:

ansible@umaster:~$ sudo kubectl get nodes --all-namespaces

NAME STATUS ROLES AGE VERSION

ubuntu01 NotReady <none> 27s v1.13.1

umaster NotReady master 8m26s v1.13

The NotReady status is because there’s no pod network available – see here for details and options:

https://kubernetes.io/docs/setup/independent/create-cluster-kubeadm/#pod-network

so apply a pod network (I’m using flannel) like this on the Master only:

ansible@umaster:~$ sudo kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/bc79dd1505b0c8681ece4de4c0d86c5cd2643275/Documentation/kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

Then check again and things should look better now they can communicate…

ansible@umaster:~$ sudo kubectl get nodes --all-namespaces

NAME STATUS ROLES AGE VERSION

ubuntu01 Ready <none> 2m23s v1.13.1

umaster Ready master 10m v1.13.1

ansible@umaster:~$

Adding any number of subsequent nodes is very easy and exactly the same (the pod networking setup is a one-off step on the master only). I added all 4 of my worker vms and checked they were all Ready and “schedulable”. My server coped with this no problem at all. Note that by default you can’t schedule tasks on the Master, but this can be changed if you want to.

That’s the very basic “reset and restore” steps done. I plan to add this process to a Jenkins Pipeline, so that I can chain a complete cluster destroy/reprovision and application build, deploy and test process together.

The next steps I did were to:

- install the Kubernetes Dashboard to the cluster

- configure the Kubernetes Dashboard and fix permissions

- deploy a sample application, replicaset & service and expose it to the network

- configure Heapster

which I’ll post more on soonish… and I’ll add the precursor to this post on the host provisioning and kubeadm setup too.